PostgreSQL 18 is such an exceptional piece of software - it is hard to imagine anything better, right? I remember a similar thought when PostgreSQL 7 was released (“wow, how cool is that - this is it”). Well, let me put it mildly: I was wrong, and things are a lot better than they used to be. So, obviously the idea that PostgreSQL 18 is as far as humanity can go is also wrong. But what might be next?

Table of Contents

The question is: While a major step forward has been made in PostgreSQL 18, and an important milestone has been achieved … is there anything out there that is even cooler? Something we might see in a couple of years? Well, maybe: I am of course talking about “Direct I/O”.

Under normal circumstances, a read works like this:

Sounds good? Well it is, and in most cases this is exactly what we want. However, there is an additional method: Direct IO. What it does can be summed up in one sentence: DirectIO bypasses the OS page cache layer.

But let us take a look at more detail: The good part is that it skips the overhead and scalability limitations. The bad part is that it skips the services that layer provides. The obvious thing is the extra cache it provides. That can somewhat be worked around by increasing shared buffers, but the OS is able to dynamically trade cache space for backend allocated memory. Shared buffers must be allocated pessimistically.

Then, there is OS readahead. Version 18 has ReadStream, which can use async IO to do readahead, and which has been implemented for sequential scans, bitmap scans and VACUUM. But not for normal index scans. Currently, if an index range scan happens to read data sequentially (index order approximately matches table order) then there is a major performance difference as page cache readahead would do prefetching, but direct IO only submits each read as it is needed.

Finally, page cache does write buffering, allowing many writes to be in flight at the same time. Async IO doesn't support writes yet.

Before we dive into the benchmark and the greatness of Direct I/O: There is no full implementation of this in PostgreSQL and there won’t be for many years to come - However, what there is, is a DEBUG (!) feature that uses direct I/O. Please DO NOT use this in production. Keep in mind: Even if you have faith in our blog and our expertise here at CYBERTEC, this is not advice to use Direct I/O in PostgreSQL at this stage, just a test to show what will be possible in the future and what potential there is.

For my test, I am using two tables that look like this:

|

1 |

bench=# \d t_part_0<br /> Table "public.t_part_0"<br /> Column | Type | Collation | Nullable | Default <br />----------+---------------+-----------+----------+---------<br /> aid | bigint | | not null | <br /> bid | integer | | | <br /> abalance | integer | | | <br /> filler | character(84) | | | |

Each table is populated with 250 GB of data.

Note that I am using TWO SSDs here - each table is on a separate SSD so that we can see the maximum throughput possible.

PostgreSQL is configured as follows:

|

1 |

max_parallel_workers = 16<br />max_worker_processes = 16<br />max_parallel_workers_per_gather = 16<br />io_method = io_uring |

Those parameters are all set up for a “maximum speed parallel sequential scan”. Let us run a simple SELECT count(*) to see what happens - this is the interesting part:

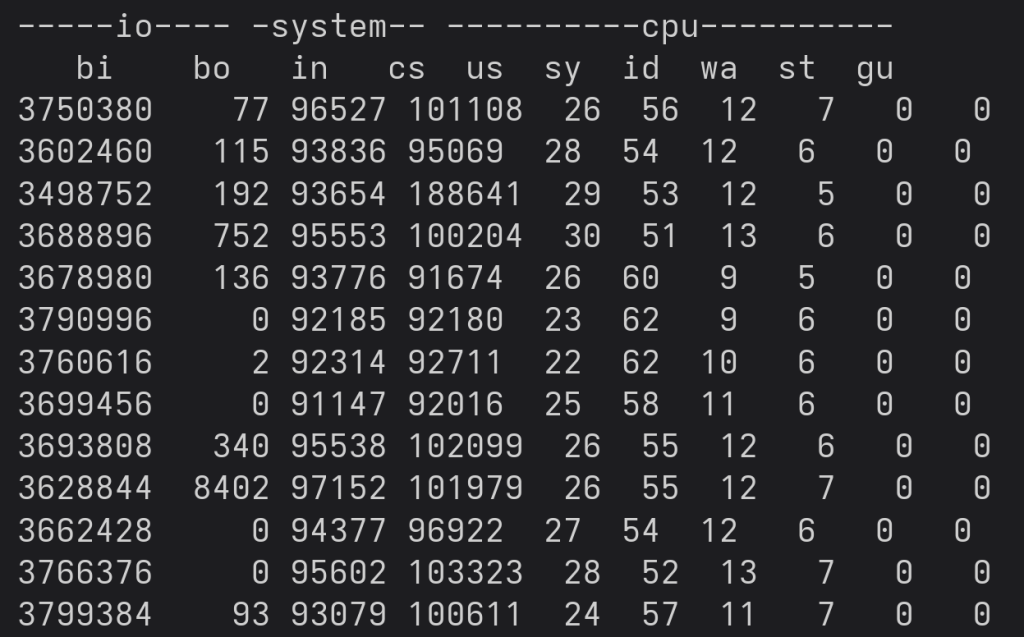

What you see is the output of vmstat on my local Linux machine. The “bi” column that we are reading at around 3.7 GB / sec from our 2 SSDs (combined). Sounds cool? Well, I am not so sure … let us inspect some important columns: CPU and disk wait. The CPU usage is around 50-60% and disk wait is not too high. This tells me that we have a good chance to achieve more throughput.

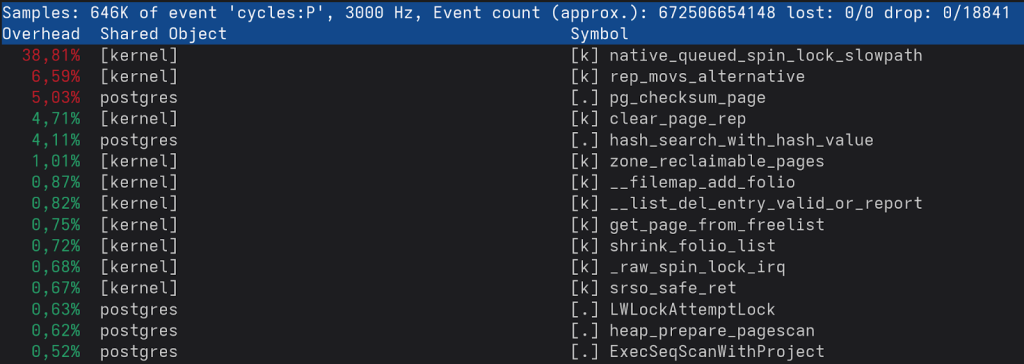

I changed some configuration parameters on PostgreSQL and checked some other stuff but finally tried my favorite brute force method: “perf”

Oh oh, it seems we are facing a fair amount of contention down in the kernel (side note: This is a 16 core physical AMD chip - not a virtual machine, no Kubernetes, etc.). I tried this with various SSDs with various numbers of partitions, but it always seems to peak out around the same speed, indicating that we are indeed starting to hit a layer PostgreSQL relies on. By the way: If you use standard I/O with PostgreSQL (as in PostgreSQL 17 and older) you will see those numbers only using a high number of worker processes).

In PostgreSQL, Direct I/O is not the way we do I/O because we do want the layer of protection the operating system gives us - this, of course, might change in the future. But, there is a debug variable that does use Direct I/O for reads. Here is how it works:

|

1 |

debug_io_direct = 'data' |

Restart PostgreSQL and run your big sequential scan again.

And oh boy, let us have a look …

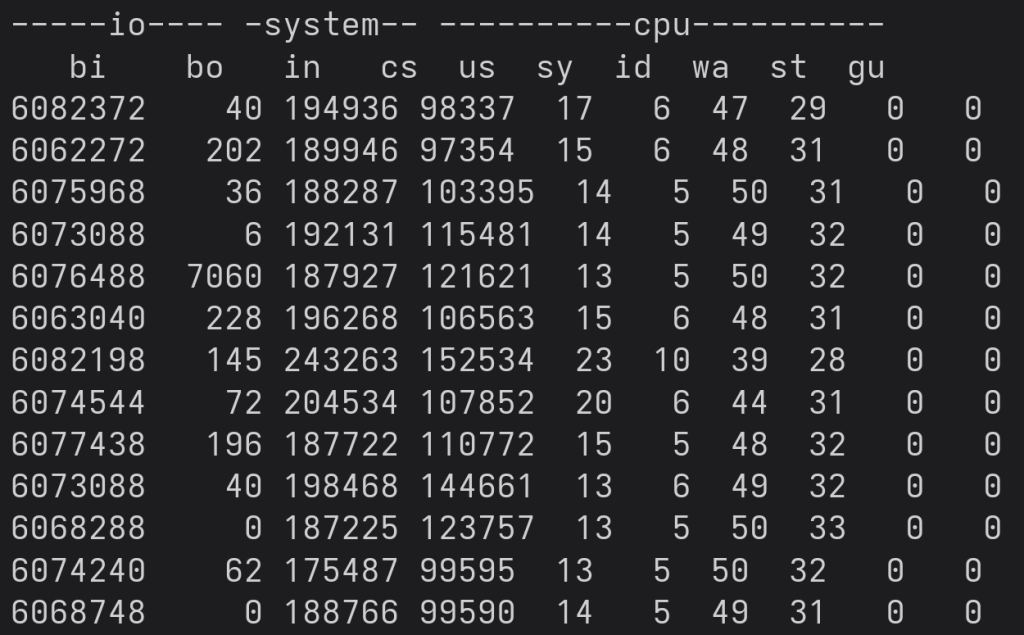

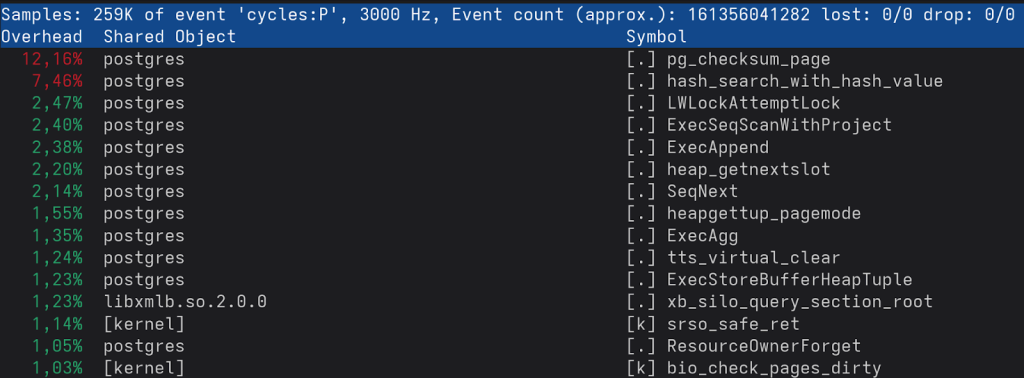

We are now reading at 6 GB / second (and aren't using much CPU). By skipping all the sanity on the OS level, we managed to improve speed. But there is more - here is what “perf” has to say:

The most time-consuming part here is already inside PostgreSQL - the kernel is nowhere to be seen anymore. The checksum function has been written by one of our most brilliant guys and I can assure you that this code is extremely fast.

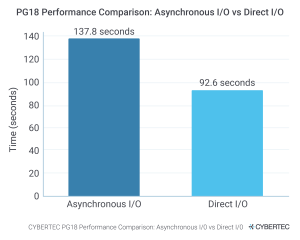

Obviously, disk I/O significantly impacts query times: The following chart

We will publish more benchmarks fairly soon when additional fast local NVMEs arrive (normal SSDs don’t do the job).

Note that this post is simply an outlook on what might be possible in the future and what can be done on fairly common modern hardware. Hardware speed is ever increasing, so it is obviously important to see and understand where the next bottlenecks will be and what can be done to predict and avoid them. Historically, disk I/O has always been one of the core bottlenecks in database technology, but we shall see what the future brings. Maybe there will be a time some day when things change. CYBERTEC will be here to witness those developments.

You are currently viewing a placeholder content from Turnstile. To access the actual content, click the button below. Please note that doing so will share data with third-party providers.

More Information

Leave a Reply